One of the cool new features in SQL Server 2014 is delayed durability (available in all editions), which is described in detail in Books Online.

I think I’m going to see a lot of people turn this on, as you can get a profound increase in transaction throughput with the right workload. However, I also think a lot of people are going to turn this on without realizing the potential for data loss and without consciously making the appropriate trade-off.

Why can it give a throughput boost?

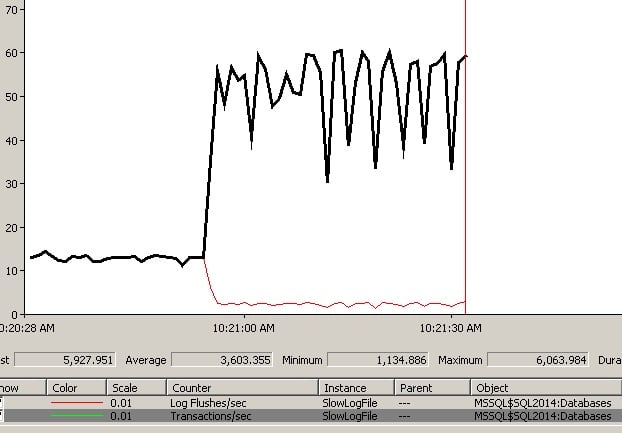

I put together a contrived workload with a small table where 50 concurrent clients are updating the same rows, and the database log is on a slow I/O subsystem. Here’s a graph showing my test:

The obvious change point is where I enabled delayed durability, with all transactions being forced to use it. Before the change, the number of Transactions/sec is equal to the number of Log Flushes/sec, as each transaction is holding locks that block all other transactions (I told you it’s a contrived workload). So why the profound jump in Transactions/sec when I forced delayed durability?

Under normal circumstances, when a transaction commits, the commit doesn’t complete until the log block (see this blog post for more details) containing the LOP_COMMIT_TRAN log record for the transaction has been flushed to disk and the write is acknowledged back to SQL Server as having completed, providing the durability of the transaction (the D in the ACID properties of the transaction). The transaction’s locks cannot be dropped until the log flush completes.

In my workload, all the other transactions are waiting for the one that is committing, as they all need the same locks, so Transactions/sec is tied to Log Flushes/sec in this case.

With delayed durability, the transaction commit proceeds without the log block flush occurring, so the act of making the transaction durable is delayed. Under delayed durability, log blocks are generally only flushed to disk when they reach their maximum size of 60KB (there are a few other triggers, listed in the notes below). This means that transactions commit a lot faster, hold their locks for less time, and so Transactions/sec increases greatly (for this workload). You can also see that Log Flushes/sec decreased greatly, because SQL Server went from flushing lots of tiny log blocks to flushing only maximum-sized log blocks.
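If you want to watch the same counters on your own system without Performance Monitor, here’s a rough sketch that samples them from sys.dm_os_performance_counters. The "/sec" counters are stored as cumulative values in that DMV, so the sketch takes two samples ten seconds apart and divides the difference by the interval. The database name DelayedDurabilityTest is a placeholder I’ve made up for illustration, and the ten-second interval is an arbitrary choice.

```sql
-- Placeholder database name (an assumption); substitute your own.
DECLARE @db sysname = N'DelayedDurabilityTest';

-- First sample of the cumulative counter values.
SELECT RTRIM(counter_name) AS counter_name, cntr_value
INTO #sample1
FROM sys.dm_os_performance_counters
WHERE object_name LIKE N'%:Databases%'
  AND instance_name = @db
  AND counter_name IN (N'Transactions/sec',
                       N'Log Flushes/sec',
                       N'Log Bytes Flushed/sec');

-- Arbitrary sampling interval of 10 seconds.
WAITFOR DELAY '00:00:10';

-- Second sample, converted to a per-second rate over the interval.
SELECT s1.counter_name,
       (pc.cntr_value - s1.cntr_value) / 10.0 AS per_second
FROM sys.dm_os_performance_counters AS pc
JOIN #sample1 AS s1
  ON s1.counter_name = RTRIM(pc.counter_name)
WHERE pc.object_name LIKE N'%:Databases%'
  AND pc.instance_name = @db;

DROP TABLE #sample1;
```

Dividing the Log Bytes Flushed/sec rate by the Log Flushes/sec rate gives the average size of each flush, which should climb toward the 60KB maximum once delayed durability is in effect for a workload like this one.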

Note:

- I was forcing all transactions to be delayed durable, but the facility exists to make the delayed durability choice per transaction too (see Books Online for more details).

- There’s a bit more to the log block flushing too: under delayed durability, a log block will flush when it fills up, or if a non-delayed durable transaction commits, or if the new sp_flush_log proc is executed.
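As a minimal sketch of the options mentioned in the notes above: the database-level setting can be DISABLED (the default), ALLOWED, or FORCED, and with ALLOWED each transaction opts in at commit time. The database name DelayedDurabilityTest and the table dbo.PageHits are hypothetical names used only for illustration.

```sql
-- Hypothetical database name; substitute your own.
-- ALLOWED lets each transaction choose at commit time.
-- Use FORCED to make every transaction delayed durable,
-- or DISABLED (the default) to make every transaction fully durable.
ALTER DATABASE [DelayedDurabilityTest] SET DELAYED_DURABILITY = ALLOWED;

-- Confirm the current setting.
SELECT name, delayed_durability_desc
FROM sys.databases
WHERE name = N'DelayedDurabilityTest';

-- Run the rest in the context of that database.
-- dbo.PageHits is a hypothetical table holding low-value data.
BEGIN TRANSACTION;

UPDATE dbo.PageHits
SET HitCount = HitCount + 1
WHERE PageID = 1;

-- Opt this one transaction in to delayed durability.
COMMIT TRANSACTION WITH (DELAYED_DURABILITY = ON);
```

With the ALLOWED setting, a plain COMMIT TRANSACTION stays fully durable, so only the transactions that explicitly ask for delayed durability take on the risk.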

My good friend Aaron Bertrand over at SQL Sentry has a long post about delayed durability that looks into its performance implications in a little bit more depth so I recommend you check out his post as well.

So this looks great, for the right type of workload. But I bet you’re thinking:

What’s the catch?

Your transactions aren’t durable when they commit. Simple.

Now you may be thinking that if the system crashes, the most you’re going to lose is up to 60KB of transaction log. Wrong. If that last log block contains the LOP_COMMIT_TRAN log record for a long-running transaction, when the system crashes, and that log block isn’t on disk, that whole transaction will roll back during crash recovery. So the potential for work/data loss is greater than just 60KB.

And there’s more:

- Log backups will not back up that unflushed log block, as it’s not on disk, so non-durable committed transactions may not be contained within a log backup.

- Non-durable transactions that have committed are not protected by synchronous database mirroring or a synchronous availability group either, as these rely on log block flushes (and transmission to the mirror/replica).

For critical transactions, you can execute sp_flush_log after the commit, or use per-transaction delayed durability instead, so that only the non-critical transactions are delayed durable.
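Here’s a sketch of the sp_flush_log approach, assuming the database is set to DELAYED_DURABILITY = FORCED so that an ordinary commit can’t be made fully durable on its own. The table dbo.Payments and its columns are hypothetical.

```sql
-- Assumes DELAYED_DURABILITY = FORCED on the current database.
-- dbo.Payments is a hypothetical table holding critical data.
BEGIN TRANSACTION;

INSERT INTO dbo.Payments (AccountID, Amount)
VALUES (42, 100.00);

-- Under FORCED, this returns without waiting for the log block flush.
COMMIT TRANSACTION;

-- Flush the pending log for the current database to disk,
-- hardening the commit above along with any earlier delayed durable commits.
EXEC sys.sp_flush_log;
```

There is still a small window between the commit returning and the flush completing where a crash would lose the transaction, so don’t acknowledge the work to the caller until sp_flush_log has returned.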

So the million-dollar question is:

Should I enable delayed durability?

It depends. Is your business comfortable making the throughput vs. durability trade-off? Does enabling it give a throughput boost? If yes to both, go ahead. If no to either, don’t enable it. That’s a very simplistic way of thinking about it, but that’s what it boils down to really.

There are lots of other things you can do to increase the throughput and performance of the transaction log, and I explained them in a blog post series:

- Trimming the Transaction Log Fat

- Trimming More Transaction Log Fat

- Transaction Log Configuration Issues

- Transaction Log Monitoring

As I stated above though, I think a lot of people are going to be wowed by the throughput boost (if their workload benefits) from delayed durability and see this as a no-brainer, without considering the potential for data loss.

Tempting, isn’t it?

The post Delayed Durability in SQL Server 2014 appeared first on Paul S. Randal.